What Are Neural Networks And How Are They Changing Your Life?

Does your smartphone answer questions?

Does Google Images recognize what’s in any photo?

If the answer is yes, it’s because these applications use neural networks. No miracles, no magic. Miracles and magic would be a lot faster for me to explain.

In this blog article, we will dive into the fascinating world of neural networks.

It’s not exactly easy stuff. I mean, eating sushi is definitely easier. But don’t worry, we will try to be as newbie-friendly as possible!

Why are Neural networks so amazing?

Artificial neural networks are computing systems that learn to perform tasks by considering examples, generally without being programmed with task-specific rules.

They are an important family of machine learning algorithms, used for both supervised and unsupervised learning (especially the former), and they were originally inspired by the way the human brain works.

Obviously, it has not been possible to recreate a structure as complex as the brain, but the results achieved so far are still amazing.

The strength of Neural networks

While simple models like linear regression can make predictions based on a small number of data features, neural networks can handle huge datasets with many features!

For example, in image recognition, they can learn to identify images of cats by analyzing sample pictures that have been manually labeled as “cat” or “no cat”, and then use what they learned to identify cats in other images.

They do this without any prior knowledge of cats. Instead, they automatically derive identifying characteristics from the samples they process.

A little bit of history about Neural networks

The first ideas for artificial neural networks date back to the 1940s. The main concept was that a network of interconnected artificial neurons could learn to recognize patterns in the same way as a human brain.

The basic building block on which the many neural network models are based is, indeed, the artificial neuron proposed by Warren McCulloch and Walter Pitts in 1943.

This neuron was modeled as a unit that combines multiple binary inputs into a single binary output.

A sufficient number of such units, connected to form a network, could compute simple logical functions.
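To make this concrete, here is a minimal Python sketch of a McCulloch-Pitts-style unit. It is a simplification. It counts the active binary inputs and fires when the count reaches a threshold. It also leaves out the inhibitory inputs of the original 1943 model. The function names and thresholds are illustrative choices, not taken from the original paper.

```python
def mp_neuron(inputs, threshold):
    """Simplified McCulloch-Pitts unit: binary inputs in, one binary output.

    Returns 1 if the number of active inputs reaches the threshold, else 0.
    (The inhibitory inputs of the original model are omitted here.)
    """
    return 1 if sum(inputs) >= threshold else 0


# With two inputs, the threshold alone decides which logical function is computed.
def and_gate(a, b):
    return mp_neuron([a, b], threshold=2)


def or_gate(a, b):
    return mp_neuron([a, b], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")
```

Note that nothing is learned here: the threshold is fixed by hand.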

The introduction of Perceptrons

In 1958, Frank Rosenblatt introduced the first neural network scheme, called the perceptron.

This was the ancestor of current neural networks. It could recognize and classify patterns, and it was meant to help interpret the general structure of biological systems.

Rosenblatt’s probabilistic model therefore aimed to analyze, in mathematical form, functions such as information storage and their influence on pattern recognition.

The perceptron was a decisive step forward from the binary model of McCulloch and Pitts, because its synaptic weights were variable, and therefore the perceptron was able to learn!
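The difference is easy to see in code. Below is a minimal sketch of the classic perceptron learning rule, learning the logical AND function. It uses a step activation and error-driven weight updates. The learning rate, number of epochs and zero initialization are illustrative assumptions, not Rosenblatt’s original setup.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum of the inputs is positive."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, lr=1, epochs=10):
    """Perceptron rule: after each mistake, move the weights toward the correct answer."""
    weights = [0] * len(samples[0])
    bias = 0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


samples = [[0, 0], [0, 1], [1, 0], [1, 1]]
labels = [0, 0, 0, 1]  # logical AND
w, b = train_perceptron(samples, labels)
print([predict(w, b, x) for x in samples])  # [0, 0, 0, 1]
```

The weights start at zero and change only when a prediction is wrong. Because AND is linearly separable, the perceptron rule is guaranteed to converge on it.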


The structure of Neural networks

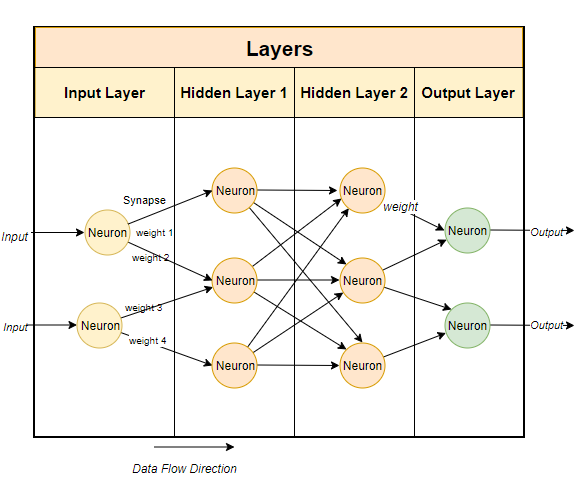

The structure of a neural network is clearly inspired by that of the brain. It consists of interconnected layers of units, called artificial neurons, which send data to each other through connections called edges.

The output of each layer becomes the input of the next one.

The first and last layers of the network are called the input layer and the output layer, respectively. Each layer in between is known as a “hidden layer”.

Signals travel from the input layer to the output layer, possibly passing through some layers multiple times (as happens in recurrent networks). Each layer learns to recognize different features of the data.

I wrote “layer” a thousand times. I will see the layers in my dreams. Or nightmares.
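The layer-by-layer flow described above can be sketched in a few lines of Python. This is a minimal forward pass through fully connected layers with a sigmoid activation. Everything concrete here is hypothetical, picked for illustration rather than taken from a trained model:

- the network size (3 inputs, 2 hidden neurons, 1 output);
- the weights;
- the choice of sigmoid.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Feed the data through the layers: the output of each layer is the input of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2]], [0.0, 0.1]),  # hidden layer
    ([[1.5, -1.0]], [0.2]),                               # output layer
]
print(forward([1.0, 0.5, -1.0], layers))
```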

Neural networks and kitties

Think about the apps that can tell different cat breeds apart in a picture taken with your smartphone camera.

The first hidden layer in the neural network could identify the size of the animal in the image. The second layer could then recognize the shape of its body.

The third one could take care of the color of the fur, and so on…

This carries on through to the final layer, which outputs the probability that the cat is a proud member of a specific breed.

Personally, I hope for Thai.

I LOVE Thai cats.
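How does the last layer turn its raw numbers into probabilities? For multi-class outputs a common choice, though not the only one, is the softmax function, sketched below. The breed names and raw scores are made up for the example. A real app would get the scores from a trained network.

```python
import math


def softmax(scores):
    """Turn raw scores from the output layer into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


breeds = ["Thai", "Persian", "Maine Coon"]  # hypothetical classes
scores = [2.0, 1.0, 0.1]                    # hypothetical raw outputs of the last layer
for breed, p in zip(breeds, softmax(scores)):
    print(f"{breed}: {p:.2f}")
```

The highest raw score always gets the highest probability, but every class keeps a non-zero share.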

Something similar happens with Google Lens, which can recognize practically anything.

You know what? I’m an Italian guy working in Warsaw.

The main language here is Polish.

Well, I always ask for the Polish menu, even though my Polish is terrible, just to play with Google Lens.

This way, I have fun translating the text in real time, and I pretend to know the language just to make a good impression! >:D


Training process, Weights and Back-propagation

During the training process, the system learns to recognize every characteristic of our cat by gradually adjusting the importance of the data that flows between the layers of the network.

This is possible because each connection between layers has an associated “weight”, whose value can be increased or decreased to change the importance of that connection.

At the end of each training cycle, the system checks whether the final output of the neural network is getting closer to or further from the expected result.

For example, it checks whether the network is getting better or worse at identifying the cat’s breed.

To reduce the gap between the actual output and the desired output, the system works backwards through the neural network, adjusting the weights of the connections between the layers, as well as an associated value called a bias.

This process is called back-propagation.
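Here is a minimal, pure-Python sketch of back-propagation for a tiny network learning XOR, a function a single perceptron cannot learn. The network has one hidden layer: 2 inputs, 4 hidden neurons and 1 output. Several choices are illustrative assumptions: the sigmoid activation, the squared-error loss, the learning rate, the epoch count and the random seed. In practice, libraries such as PyTorch or JAX typically compute these gradients automatically instead of by hand.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

N_IN, N_HID = 2, 4
LR = 0.5


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Weights start as small random numbers; biases start at zero.
w1 = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HID)]
b1 = [0.0] * N_HID
w2 = [random.uniform(-1, 1) for _ in range(N_HID)]
b2 = 0.0


def forward(x):
    """Return the hidden activations and the final output for input x."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y


def mse(data):
    """Mean squared gap between actual and desired outputs."""
    return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)


data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]  # XOR
initial_loss = mse(data)

for _ in range(5000):
    for x, t in data:
        h, y = forward(x)
        # Error signal at the output: d(loss)/d(pre-activation), with the
        # constant factor 2 of the squared error folded into the learning rate.
        delta_out = (y - t) * y * (1 - y)
        # Propagate it backwards to each hidden neuron through its outgoing weight.
        delta_hid = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(N_HID)]
        # Gradient-descent step on every weight and bias.
        for j in range(N_HID):
            w2[j] -= LR * delta_out * h[j]
            b1[j] -= LR * delta_hid[j]
            for i in range(N_IN):
                w1[j][i] -= LR * delta_hid[j] * x[i]
        b2 -= LR * delta_out

final_loss = mse(data)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
print([round(forward(x)[1], 2) for x, _ in data])
```

Note that the hidden-layer error signals are computed before any weight is changed. This way, every update in one step uses the same snapshot of the network.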

Types of Neural networks

Neural networks come in many categories, each with its own strengths, weaknesses, and uses.

Let’s see some of them!

1/ Recurrent neural network

Commonly used for text-to-speech conversion. It is a type of artificial neural network in which the output of a layer is saved and fed back into the input. This helps predict the layer’s next output: for example, the most likely next word in a sentence.

Each node acts as a memory cell while carrying out its computations. Thanks to this, the network remembers information it may need later.
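The “memory” idea fits in a few lines. This is a minimal sketch of a plain (Elman-style) recurrent step with a single hidden unit and a tanh activation. The parameter values are hypothetical and untrained. The input is 1 only at the first step, yet the hidden state stays non-zero afterwards and fades gradually. That carried-over state is what lets a recurrent network use earlier information later.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step of a minimal RNN with a single hidden unit.

    The new hidden state mixes the current input with the previous state,
    so information from earlier steps is carried forward.
    """
    return math.tanh(w_x * x + w_h * h_prev + b)


# Hypothetical, hand-picked parameters (not trained).
w_x, w_h, b = 0.8, 0.5, 0.0
h = 0.0  # the "memory" starts empty
states = []
for x in [1.0, 0.0, 0.0, 0.0]:
    h = rnn_step(x, h, w_x, w_h, b)
    states.append(h)
print(states)
```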

2/ Feedforward neural network

One of the simplest networks: it can even have just one layer. Unlike more complex types, it has no loops or feedback connections, so data moves in one direction only, from input to output.

On the plus side, it is easy to maintain and can deal with data that contains a lot of noise.

That’s why it’s used in technologies like face recognition and computer vision, where the target classes are hard to tell apart.

A special case: Sequence to sequence model

It consists of two recurrent neural networks: an encoder, which processes the input, and a decoder, which produces the output.

They can do so using the same or different parameters.

This model is particularly useful when the input and the output have different lengths.

For this reason, it is used in chatbots and question-answering systems.
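The encoder-decoder idea can be sketched with two tiny recurrent steps. This sketch is minimal and untrained. The encoder squeezes a 5-step input into a single context value. The decoder has its own hypothetical parameters and unrolls from that context for a different number of steps. Real sequence-to-sequence models differ in several ways:

- they use vector-valued states and trained weights;
- they usually stop decoding when they emit a special end-of-sequence token.

Here the output length is simply fixed by hand.

```python
import math


def rnn_step(x, h, w_x, w_h):
    """Plain recurrent update with a single hidden unit."""
    return math.tanh(w_x * x + w_h * h)


def encode(inputs, w_x=0.9, w_h=0.5):
    """Encoder RNN: compress an input sequence of any length into one context value."""
    h = 0.0
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h)
    return h


def decode(context, n_steps, w_x=0.7, w_h=0.6):
    """Decoder RNN (separate parameters): start from the context and emit
    n_steps outputs, feeding each output back in as the next input."""
    h, y, outputs = context, 0.0, []
    for _ in range(n_steps):
        h = rnn_step(y, h, w_x, w_h)
        y = h  # hypothetical output layer: the identity
        outputs.append(y)
    return outputs


context = encode([0.2, 0.4, 0.6, 0.8, 1.0])  # 5 input steps
print(decode(context, n_steps=3))            # 3 output steps
```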

3/ Convolutional neural networks

A CNN contains one or more convolutional layers.

Besides these, a CNN usually also includes pooling layers and fully connected layers.

Before passing the result to the next layer, a convolutional layer applies a convolution operation to its input. Because the same small filter is reused across the whole input, the network can be much deeper while using far fewer parameters.

This probably makes CNNs the best-suited networks for image recognition. They are also used in agriculture, where weather features are extracted from satellite images to predict the growth of a field.
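Here is a rough sketch, in plain Python, of what a convolutional layer computes. Strictly speaking, most deep learning libraries compute a cross-correlation (the kernel is not flipped), and so does this code. The 4x4 image and the 2x2 edge-detecting kernel are made-up examples. The same four kernel weights are reused at every position of the image. That weight sharing is why a convolutional layer needs far fewer parameters than a fully connected one.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


# A 4x4 image with a vertical edge between dark (0) and bright (1) columns.
image = [[0, 0, 1, 1] for _ in range(4)]
# A 2x2 filter that responds to a left-to-right increase in brightness.
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter responds only in the middle column, exactly where the edge is.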

Neural networks and Supervised learning

Today, almost all highly successful neural networks use the supervised training approach.

Among them, we can find FFNN, RNN, LSTM, CNN, GAN, and U-Net.

You don’t have to remember all these acronyms. It’s just to prove my point and to impress you, ok?

The only frequently used neural network connected to unsupervised learning is Kohonen’s Self-Organizing Map (KSOM), which is used to cluster high-dimensional data.
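To get a feel for how a SOM groups data without labels, here is a minimal sketch of Kohonen’s idea. It uses a 1-D row of nodes, each holding a weight vector. For every input, the closest node (the “best matching unit”) and its grid neighbors are pulled toward that input. Several details are simplifying assumptions for illustration, not the exact formulation from Kohonen’s work:

- the grid size;
- the Gaussian neighborhood;
- the linear decay schedules;
- the diagonal initialization.

```python
import math


def best_matching_unit(nodes, p):
    """Index of the node whose weight vector is closest (Euclidean) to point p."""
    return min(range(len(nodes)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(nodes[i], p)))


def train_som(data, n_nodes=4, epochs=50, lr0=0.5, sigma0=1.5):
    """Train a minimal 1-D self-organizing map on 2-D points."""
    # Nodes start evenly spaced on the diagonal (an illustrative choice;
    # random initialization is also common).
    nodes = [[0.4 + 0.2 * i / (n_nodes - 1)] * 2 for i in range(n_nodes)]
    for epoch in range(epochs):
        # Learning rate and neighborhood radius both shrink over time.
        frac = epoch / epochs
        lr = lr0 * (1 - frac)
        sigma = sigma0 * (1 - frac) + 0.01
        for p in data:
            winner = best_matching_unit(nodes, p)
            for i, node in enumerate(nodes):
                # Nodes near the winner on the 1-D grid are pulled toward p too.
                h = math.exp(-((i - winner) ** 2) / (2 * sigma ** 2))
                for d in range(len(p)):
                    node[d] += lr * h * (p[d] - node[d])
    return nodes


# Two unlabeled groups of points: one near (0, 0), one near (1, 1).
data = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 1.0), (0.9, 1.0), (1.0, 0.9)]
nodes = train_som(data)
print(best_matching_unit(nodes, (0.05, 0.05)), best_matching_unit(nodes, (0.95, 0.95)))
```

After training, points from the two groups map to different nodes, even though no labels were ever provided.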

If you have never heard of supervised or unsupervised learning, you can get a clearer idea by reading our previous article on the different categories of machine learning.

Supervised and Unsupervised learning

If, on the other hand, you are too lazy, or you have just broken all your fingers and cannot click on the link, here is the short version: in supervised learning, we teach machines by example.

In this approach, we feed the computer previously labeled examples, knowing that there is a relationship between the input and the resulting output.

The machine recognizes the connection between them and learns a function that represents it mathematically.

In contrast, unsupervised learning is used with data that hasn’t been previously classified into categories by humans.

We use this system to explore the data and identify any internal structures or patterns.

The machine finds relationships or similarities in the data on its own and uses them to split the data into groups, known as clusters.


Conclusions

My friends, that’s all for today.

I hope you enjoyed the article!

You can find other interesting stuff about machine learning and AI on our blog.

I know it’s interesting because I wrote it.