Artificial Intelligence VS Bad Guys: Using Algorithms to Moderate Social Networks

The internet is a nasty place. Well, beautiful and nasty at the same time.

Like a Lego Store full of incredible toys sold at exorbitant prices.

But without porn. No porn in Lego Stores.

As the amount of data uploaded by users of online platforms continues to increase, traditional human-led moderation is no longer sufficient to stem the resulting tide of toxic content.

To face the challenge, many of these platforms have been boosted with moderation mechanisms based on AI, specifically on machine learning algorithms.

A necessary premise

I will not particularly focus on the technical aspects of artificial intelligence. You can find anything you need to know about this topic in my previous articles on machine learning and deep learning.

Instead, I’ll show you the main ways these algorithms are applied in online environments to keep bad guys at bay.

Are you ready? Let’s start!

How does AI moderation work?

AI online content moderation is essentially based on machine learning, which allows computers to make decisions and predict results without being explicitly programmed to do so.

This approach is extremely data-hungry, because the system needs to be trained on large datasets to improve its performance and fulfil its tasks properly.

In recent times, machine learning systems experienced a massive breakthrough thanks to the introduction of deep neural networks that enabled an additional step forward: the so-called deep learning.

Deep learning allowed systems to recognize and manage complex input data such as human speech or Dream Theater solos (those are probably too much even for a deep neural network).

Pre and post moderation

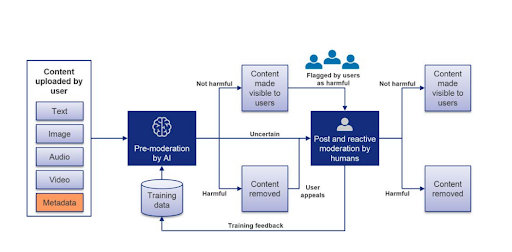

AI usually takes care of online moderation in two different phases. The first one, called pre-moderation, happens before the content is published, and it's handled almost exclusively by automated systems.

Post-moderation, or reactive moderation, happens instead after content has been published and flagged as potentially harmful, either by users or by the AI itself. In other cases, the content may already have been removed but needs an additional review upon appeal.

Main phases of AI moderation. Source: Cambridge Consultants

AI pre-moderation

AI is commonly used to improve pre-moderation accuracy by flagging content for human review, using a range of different techniques.

Hash matching, for example, compares the fingerprint of an image with a database of known harmful images, while keyword filtering spots potentially offensive words so that the content can be flagged.
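To make these two techniques a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the names `KNOWN_HARMFUL_HASHES` and `FLAGGED_KEYWORDS`, the sample data, and above all the use of an exact SHA-256 digest as the fingerprint. Real platforms typically rely on perceptual hashes that survive resizing and re-encoding, which this toy version does not.

```python
import hashlib
import re

# Hypothetical database of fingerprints of known harmful images.
# Assumption: exact SHA-256 digests stand in for the perceptual hashes
# a production system would use.
KNOWN_HARMFUL_HASHES = {
    hashlib.sha256(b"bytes of a known harmful image").hexdigest(),
}

# Hypothetical keyword list; real lists are far larger and curated.
FLAGGED_KEYWORDS = {"scam", "idiot"}


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex fingerprint of the raw image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


def matches_known_harmful(image_bytes: bytes) -> bool:
    """Hash matching: look the fingerprint up in the database."""
    return fingerprint(image_bytes) in KNOWN_HARMFUL_HASHES


def flagged_keywords(text: str) -> set[str]:
    """Keyword filtering: return the flagged words found in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return words & FLAGGED_KEYWORDS
```

In a pipeline like the one described here, a match from either function would not delete anything by itself: it would simply send the content to a human reviewer.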

When it comes to visual material, object detection and scene understanding are effective methods to moderate complex image and video content.

Recurrent neural networks gave an incredible boost to the analysis of video content, making it possible to consider not only the single frames but also the relations between them, and so the entire context.

Check the bad guy first

AI techniques can also be used to detect naughty users and prioritise their content for review.

Because a leopard cannot change its spots, right?

This approach focuses on metadata such as a user's history, their followers and friends, information about the user's real identity, and so on.

AI post-moderation

AI is able to support human moderators during the post-moderation phase in at least two ways. Firstly, it helps to increase their productivity by prioritising the most harmful content for review.

For example, pineapple on pizza is clearly harmful, but less than bullying against children.
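Prioritising by harmfulness can be pictured as a priority queue. The sketch below is only an illustration: the `ReviewQueue` class, the content IDs and the harm scores are all made up, and in a real system the scores would come from a trained classifier rather than being typed in by hand.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedItem:
    # heapq is a min-heap, so we store the negated harm score
    # to pop the most harmful item first.
    sort_key: float
    content_id: str = field(compare=False)


class ReviewQueue:
    """Hands flagged content to moderators, most harmful first."""

    def __init__(self) -> None:
        self._heap: list[QueuedItem] = []

    def add(self, content_id: str, harm_score: float) -> None:
        heapq.heappush(self._heap, QueuedItem(-harm_score, content_id))

    def next_for_review(self) -> str:
        return heapq.heappop(self._heap).content_id


# Hypothetical scores between 0 (harmless) and 1 (very harmful).
queue = ReviewQueue()
queue.add("pineapple-pizza-photo", 0.2)
queue.add("bullying-thread", 0.95)
queue.add("spam-link", 0.5)
```

Calling `queue.next_for_review()` repeatedly hands out the bullying thread before the spam link, and the pizza photo last.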

A second tool is the ability of AI to reduce the negative effects of content moderation on real moderators. Something like psychological cyber support.

Systems can limit exposure to disturbing content by blurring out parts of images. The moderator can switch the blur off manually if needed.

Another technique is visual question answering, in which humans can ask the computer questions about the content to determine its level of harmfulness before viewing it.

Encouraging positive engagement with AI

In addition to active forms of moderation, artificial intelligence can also play the role of educator.

In fact, AI-based systems help maintain a positive online environment by discouraging users from posting potentially harmful content.

This approach is based on the concept of the “online disinhibition effect”, according to which the anonymity and asynchronicity of online interactions make users less empathetic and more… well… a**holes.

We can mitigate this effect with artificial intelligence by using automatic keyword extraction to detect malicious messages.

After that, the system can force users to rephrase in a less controversial way.

Chatbots are another useful tool: they can encourage users to maintain a positive attitude by automatically warning them when they post something offensive.

Improving training data with AI

It will sound a bit weird, but online platforms use AI to create even more harmful content.

Does this mean that social network developers have gone crazy? Nah, they do it for a good reason.

As we said before, machine learning systems require huge datasets to be trained properly. AI can boost this process by synthesising training data with specific tools to improve pre-moderation performance.

For example, generative adversarial networks or GANs can be used to create harmful content such as violence or nudity and feed machines with it to train AI-based moderation systems.

GANs are also very useful for augmenting small datasets that cover under-represented minorities.

AI against fake accounts

Sometimes, the best solution is to erase the author of the content instead of the content itself.

That's the case with fake accounts, the tools of an extremely profitable business based on spreading spam, malware, phishing, and so on.

The scale of this problem is quite impressive. Just in 2019, Facebook took down something like 2 billion fake accounts per quarter.

Facebook vs fake profiles

Speaking of Facebook, its struggle against fake accounts is largely based on machine learning and is carried out both before and after the accounts are activated.

Facebook’s new machine learning system is known as Deep Entity Classification (DEC) and makes extensive use of neural networks.

It was developed to differentiate fake users from real ones based on their connection patterns and other deep characteristics like the age and gender distribution of the user’s friends.

In an early stage of training, the system learns to tell real users from fake ones using a large number of low-precision, machine-generated labels. Next, the model is fine-tuned on a smaller set of accurate, manually labelled data.
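The two-stage idea (lots of noisy labels first, a few accurate ones afterwards) can be sketched in a few lines. To be clear about the assumptions: this is not Facebook's code, DEC uses deep neural networks while this toy swaps in a plain logistic regression, the two features are hypothetical scores between 0 and 1, and the data is synthetic.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features) -> float:
    """Probability that an account is fake."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(weights, bias, data, epochs, lr):
    """Plain stochastic gradient descent on the logistic loss."""
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def make_accounts(rng, n, noise):
    """Synthetic accounts with two hypothetical 'suspiciousness' features."""
    data = []
    for _ in range(n):
        is_fake = rng.random() < 0.5
        centre = 0.8 if is_fake else 0.2
        features = [rng.gauss(centre, 0.1), rng.gauss(centre, 0.1)]
        label = int(is_fake)
        if rng.random() < noise:
            label = 1 - label  # simulate a wrong machine-generated label
        data.append((features, label))
    return data


rng = random.Random(0)

# Stage 1: many cheap labels, 20% of which are wrong.
noisy_data = make_accounts(rng, 2000, noise=0.2)
weights, bias = train([0.0, 0.0], 0.0, noisy_data, epochs=5, lr=0.1)

# Stage 2: fine-tune the same weights on a small, accurate set.
clean_data = make_accounts(rng, 100, noise=0.0)
weights, bias = train(weights, bias, clean_data, epochs=20, lr=0.05)
```

The design point is that stage 2 starts from the weights learned in stage 1 instead of from scratch, so the expensive manual labels are only used to correct what the cheap ones got roughly right.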

After the implementation of DEC, Facebook was able to limit the number of fake accounts on the social network to about 5% of monthly active users.

Sounds sweet, huh? Unfortunately, with 2.5 billion monthly active users, 5% means 125 million fake accounts.

Not so sweet anymore, I guess.

AI moderation in social networks

Facebook isn’t the only platform massively leveraging AI moderation.

Let's look at some recent solutions implemented by other social networks to maintain a pleasant and friendly environment.

Basically what I try to do when I bring Nutella cookies to our office.

AI moderation in LinkedIn

The professional platform has an automatic fake account and abuse detection system, which allowed it to “take care” of more than 20 million fake profiles in the first 6 months of 2019.

LinkedIn's first approach relied on a blocklist, a set of inappropriate words and phrases. When any of them appeared in an account, the account was flagged and removed.

Unfortunately, this required a lot of manual work to evaluate each word or phrase in relation to its context.

Lately, a new approach has been introduced to mitigate such issues. It's based on a deep model that was trained on a set of accounts already labelled as appropriate or inappropriate (the latter having been removed from the platform).

The system is powered by a Convolutional Neural Network (CNN), which is particularly efficient at image and text classification tasks.

AI moderation in… Tinder!

I know that I just caught your attention. And it's not clickbait. I'm serious!

Tinder relies on machine learning to punish maniacs... sorry, to scan potentially offensive messages.

When the system detects a controversial message, Tinder asks the recipient to confirm whether it was offensive and, if so, directs them to its report form.

Tinder’s developers trained its machine-learning model on a wide set of messages already reported as inappropriate.

In this way, the algorithm learned keywords and patterns that help it recognize offensive texts.
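Learning keywords from already reported messages can be illustrated with a tiny Naive Bayes classifier. This is a deliberately simple stand-in, not Tinder's actual model (which the sources don't describe in detail), and the training messages below are invented for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFilter:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {"offensive": Counter(), "ok": Counter()}
        self.message_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.word_counts[label].update(tokenize(text))
        self.message_counts[label] += 1

    def _log_score(self, tokens: list[str], label: str) -> float:
        counts = self.word_counts[label]
        vocabulary = set(self.word_counts["offensive"]) | set(self.word_counts["ok"])
        total_words = sum(counts.values())
        # Log prior: share of training messages carrying this label.
        score = math.log(self.message_counts[label] / sum(self.message_counts.values()))
        for token in tokens:
            score += math.log((counts[token] + 1) / (total_words + len(vocabulary)))
        return score

    def is_offensive(self, text: str) -> bool:
        tokens = tokenize(text)
        return self._log_score(tokens, "offensive") > self._log_score(tokens, "ok")


# Invented examples standing in for reported and unreported messages.
message_filter = NaiveBayesFilter()
for reported in ["you are a stupid ugly loser", "shut up you idiot", "nobody likes you loser"]:
    message_filter.train(reported, "offensive")
for harmless in ["do you like hiking", "great photo of your dog", "shall we grab a coffee"]:
    message_filter.train(harmless, "ok")
```

A message flagged by `message_filter.is_offensive(...)` would then trigger the confirmation prompt rather than an automatic ban, which keeps the human recipient in the loop.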

AI moderation in Instagram

Instagram introduced several improvements to boost its text and image recognition technology.

This allowed the platform, for example, to detect and remove 40% more content related to suicide and self-harm.

We need AI moderation now more than ever

I’ve already said so and I’ll repeat it.

The volume of data shared between users is simply not manageable by humans anymore. At this point, the contribution of artificial tools to assist real moderators is essential.

But that's not the only issue acting as a catalyst in the search for new solutions.

Another factor to consider is the growing use of fake accounts and other AI-based tricks to influence politics.

An example? Reports suggest that fake accounts and bots may represent up to 15% of Twitter users.

In 2018, Twitter said it had removed 50,000 fake accounts likely created to influence the 2016 US presidential election.

According to estimates, their posts reached about 678,000 Americans.

COVID… again

More recently, the global health crisis has made it necessary to increase the use of AI moderation techniques.

YouTube opted to rely more on AI to moderate videos during the pandemic because many human reviewers were sent home to limit the spread of COVID.

Facebook did the same, announcing in March 2020 that it would send its content moderators home. Many of them worked for Philippines-based outsourcing companies that had just gone into quarantine.

So, fewer people eliminating toxic content and more people sitting at home, willing to fight boredom by spamming nonsense on the internet.

Sounds cool.

When solutions bring more troubles

At the same time, this crisis also exposed the flaws of artificial intelligence in handling tasks previously managed (at least in part) by humans.

In fact, the day after Facebook's announcement on AI content moderation, users were already complaining about arbitrary errors, with posts and links being blocked and marked as spam.

Unfortunately, the list of problems related to AI moderation is still quite long. Let's look at a few of them.

Flaws and biases of AI moderation

I started this article on artificial intelligence with the cool stuff. I’m gonna end it with bad things. Like a twisted fairy tale without a happy ending.

The point is that AI moderation is still far from perfect.

One reason is that identifying harmful content often requires an understanding of the context around it, to determine whether or not it is really dangerous.

This can be a challenging task for both humans and AI systems because it requires a general understanding of cultural, political and historical factors, which vary widely around the world.

Pasta with ketchup can be fine for unforgivable heretics... sorry, for some people. But it's definitely controversial and harmful content for an Italian audience.

Rightly, I would say.

Moderating the “worst” content formats

Another issue concerns the fact that online content appears in many different formats, some of which are pretty difficult to analyse and moderate.

For example, video content requires image analysis over multiple frames combined with audio analysis, while memes need a combination of text and image analysis, plus a bit of cultural and contextual understanding.

Even harder is the moderation of live content such as streaming and text chats, which can escalate quickly into a mess and must be scanned and moderated in real time.

The problem of AI biases

If humans are biased, machines made by humans are biased too.

Systems can be biased depending on who builds, develops and uses them. This issue is known as algorithmic bias and it's really hard to pin down, since this technology usually operates as a black box.

In fact, we rarely know for certain how a specific algorithm was designed, what data was used to train it, and how it works.

On this point, an interesting study has revealed that an artificial intelligence trained on what humans have already written on the Internet ends up reproducing bias against Black people and women.

Solving all these flaws will be the future challenge of AI developers.

Meanwhile, “enjoy” the flame!